Challenges and Solutions in Moving AI Agents from PoC to Production.

Modern customer services increasingly uses generative AI to build chatbots and virtual assistants that understand natural language and provide instant help. AWS notes:

“Enterprises worldwide are deploying generative AI-powered chatbots, virtual assistants, and IVR (Interactive Voice Response) systems to solve queries faster, automate tasks, and elevate business performance”

These LLM-based agents integrate with contact centers or apps to answer FAQs, schedule appointments, summarize conversations, and perform routine tasks. By combining LLM understanding with contextual knowledge sources and business rules, these agents provide 24/7 support across channels (chat, voice, email, etc.) while reducing human workload.

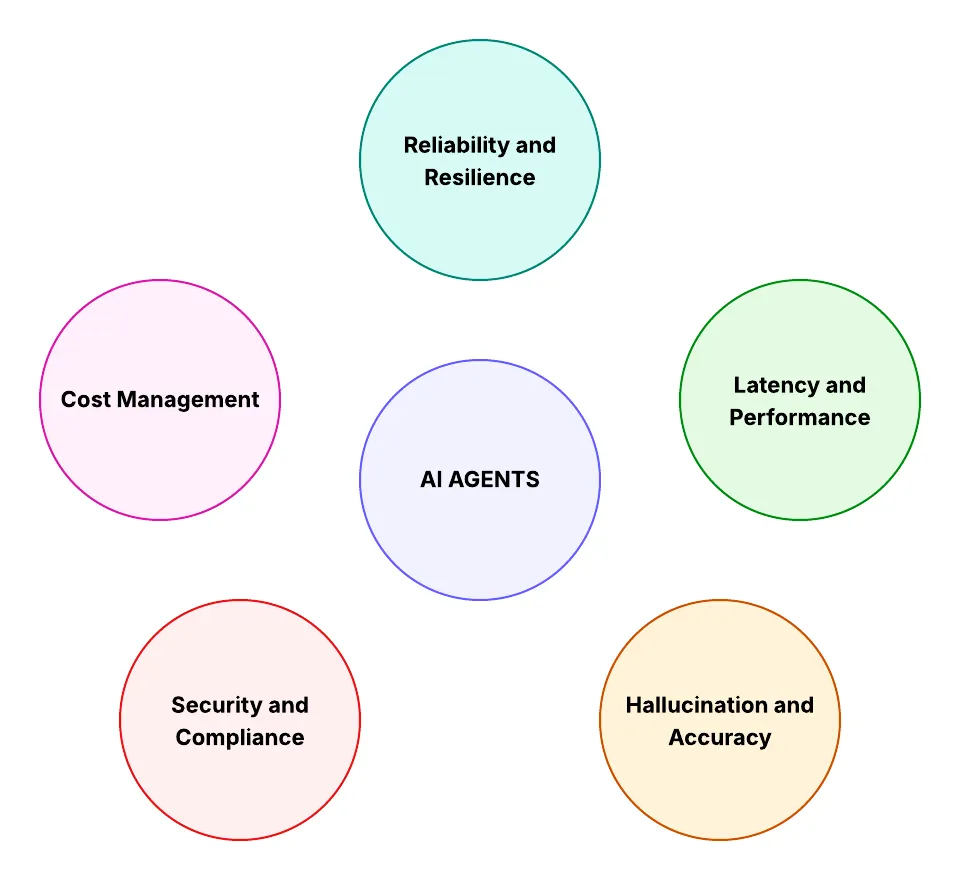

Challenges in Moving from PoC (Proof of Concept) to Production

Figure 1: Challenges in Moving from PoC to Production

Deploying LLM agents reliably at scale brings several challenges:

1. Reliability and Resilience

LLMs may behave unpredictably under real-world conditions. Agents must handle failures gracefully (e.g., timeouts, API errors, etc.) and maintain availability.

Best practices involve treating observability and fault tolerance as first-class concerns by:

-

Profiling agent execution (using AWS X-ray, Amazon CloudWatch, LangSmith, etc.) to identify bottlenecks in reasoning, tools, or external services.

-

Wrapping agent invocation in retry loops or fallback logic:

- If the agent fails or returns incomplete results, handle the exception gracefully.

- Optionally return a default answer or a message indicating delayed response.

-

Defining timeouts for tool calls to prevent hanging processes.

-

Limiting the number of reasoning loops to avoid “runaway” behavior.

-

Instrumenting every step of the agent’s reasoning with metrics, logs, and traces so problems can be detected and diagnosed.

-

Using monitoring systems (e.g., LangSmith, Langfuse, etc.) to collect:

- Latency

- Token usage

- Cost per request, etc.

-

Setting up alerts for anomalies in these metrics.

2. Latency and Performance

Agents in production must balance responsiveness with performance. High latency impacts user experience, while inefficient performance increases costs and limits scalability.

Best practices include:

-

Implementing streaming outputs for long-running tasks (token-by-token responses) to reduce perceived wait time.

-

Utilizing prompt caching to store and reuse frequently used context in prompts across multiple model invocations. This approach can reduce latency by up to 85% and costs by up to 90% for supported models in Amazon Bedrock.

-

Implementing response caching to store and reuse model outputs for identical queries, decreasing latency and reducing the need for repeated calls to LLMs.

- AWS semantic cache solution returns answers in “less than 1 second” for strong matches, greatly cutting response time. This read-only semantic cache acts as an intelligent intermediary layer between the user and Amazon Bedrock, storing curated and verified question-answer pairs. When a user submits a query, the solution first evaluates its semantic similarity with existing verified questions in the knowledge base. For highly similar queries (greater than 80% match), the solution bypasses the LLM completely and returns the curated and verified answer directly. When partial matches (60–80% similarity) are found, the solution uses the verified answers as few-shot examples to guide the LLM’s response, significantly improving accuracy and consistency. For queries with low similarity (less than 60%) or no match, the solution falls back to standard LLM processing, making sure that user questions receive appropriate responses.

3. Hallucination and Accuracy

LLMs can produce fluent but incorrect or nonsensical answers (“hallucinations”). This is critical in customer service (or finance/health domains) where misinformation erodes trust or causes harm.

Mitigations include:

-

Grounding the model with domain data (RAG), Internet Search, and validating outputs.

-

Utilizing advanced prompting techniques: Employ methods like chain-of-thought prompting to guide the model through intermediate reasoning steps, reducing the likelihood of generating hallucinations.

-

Implementing confidence scoring: Develop mechanisms to assess the confidence level of LLM outputs. Low-confidence responses can trigger fallback procedures, such as requesting human verification or providing a disclaimer.

4. Cost Management

Running large language models (LLMs) can be expensive. Production systems must minimize unnecessary calls to optimize both cost and performance.

Solutions include:

-

Setting up a read-through caching mechanism using services like Amazon OpenSearch Serverless, where the system checks the cache before making a model call. If the answer is not cached, it queries the model, stores the response, and serves future requests from the cache.

-

Monitoring token usage per request and setting usage alerts (e.g. via CloudWatch, etc.) as part of cost control.

-

Choosing smaller or more efficient models when high volume or low latency is critical.

5. Security and Compliance

Agents often handle sensitive data and must comply with regulations. Enterprise deployments require strong controls to mitigate risks associated with data breaches, unauthorized access, and regulatory non-compliance.

Solutions include:

-

Data Encryption: Implement strong encryption protocols such as AES-256 for data at rest and TLS for data in transit to protect sensitive information from unauthorized access.

-

Data Sanitization: Always sanitize and validate any data that enters an AI agent. Implement AI firewalls with strict input whitelisting to detect malicious or malformed inputs.

-

Access Controls: Enforce the principles of least privilege and need-to-know. Regularly audit and recertify AI agent access to tools, databases, and third-party APIs.

-

Medical Data Protection Guardrails:

-

Implement data protection and compliance guardrails to ensure sensitive data, such as medical claims, is properly masked.

-

Apply dynamic masking or anonymization techniques before inputting data into models and while storing data as logs.

-

Establish Business Associate Agreements (BAAs) with third-party vendors who handle Protected Health Information (PHI) to ensure they comply with HIPAA regulations.

-

-

Safe AI Guardrails:

- Ensure safe AI practices by enforcing content filters and alignment mechanisms so that AI generates helpful, harmless, and unbiased outputs.

AWS Strands for Dependable, Production Deployment

Figure 2: AWS Strands Agents

Strands Agents is an open-source SDK released by AWS for building autonomous AI agents. It takes a model-first approach: the LLM’s reasoning powers the flow, rather than hardcoded logic.

Developers define an agent by giving it a system prompt (the agent’s “role”) and a set of tools (APIs or functions) it can call. The Strands framework handles the agent loop: at each step the LLM considers context, decides on an action (possibly calling a tool), incorporates the result, and repeats until done.

This model-driven design minimizes boilerplate code while enabling sophisticated multi-step reasoning. Strands was designed for enterprise production use. It provides built-in support for observability, orchestration, and integration.

Let’s explore how AWS Strands addresses the common challenges of turning PoCs into production-grade LLM agents:

1. Reliability and Resilience

-

Observability: Strands has built-in OpenTelemetry instrumentation, allowing tracing of every model and tool call. Metrics such as tool usage, loop counts, latency, and error rates are automatically collected.

-

Error Handling: Strands supports structured error handling and retries. Agents can be configured with loop limits and timeouts to prevent runaway or stuck processes.

-

Fallbacks and Escalations: When a model fails to produce a valid output or exceeds thresholds, agents can gracefully fall back to default responses or handoff to a human operator.

-

Auditability: All interactions are traceable for compliance and debugging, critical for enterprise-grade reliability.

2. Latency and Performance

-

Serverless and Containerized Options: Strands can run on AWS Lambda for quick, stateless calls, or AWS ECS/Fargate/EKS for long-running, streaming, or high-concurrency workloads.

-

Streaming Responses: Token-by-token streaming reduces perceived latency in multi-turn conversations.

-

Optimized Model Use: Integration with Amazon Bedrock allows using smaller or specialized models for routine tasks, reducing inference costs and improving response time.

-

Caching and RAG: Strands supports integration with Semantic Caches and Amazon Bedrock Knowledge Bases, ensuring frequently asked questions are answered instantly without invoking LLMs unnecessarily.

3. Hallucination and Accuracy

-

Grounding Agents: Strands integrate with Retrieval Augmented generation (RAG) pipelines, querying curated knowledge bases or Amazon S3 / Amazon DynamoDB stored content before invoking the LLM.

-

Few-Shot Prompts: Partial matches from the knowledge base can be passed as few-shot examples to guide the LLM, reducing hallucination.

4. Cost Management

-

Efficient Model Selection: Strands allows choosing models dynamically based on workload, cost, or latency considerations.

-

Caching Strategies: Using verified caches reduces unnecessary calls to expensive foundation models.

-

Monitoring Usage: Built-in metrics track token usage, tool calls, and execution time, enabling proactive cost control.

5. Security and Compliance

-

Controlled Tool Access: Strands allows developers to explicitly define which tools an agent may call, preventing arbitrary or unsafe operations.

-

Secure Deployment: Agents can be deployed behind API Gateway with IAM or Cognito authentication. Sensitive data can be encrypted with KMS.

-

Data Handling: Persistent state (sessions, memory) can be securely stored in DynamoDB or S3, with encryption and fine-grained access control.

-

Audit and Governance: Integration with AWS Audit Manager and Bedrock Guardrails ensures compliance with privacy, accuracy, and regulatory standards (e.g., HIPAA, PII, PHI).

By combining these capabilities, AWS Strands transforms PoCs into production-grade LLM agents that are reliable, efficient, secure, observable, and cost-effective, meeting enterprise standards and other mission-critical applications.